Interpretable ML with RAG

A RAG-powered chatbot for better ML interpretability via Q&A.

TL;DR: Live Demo

Background

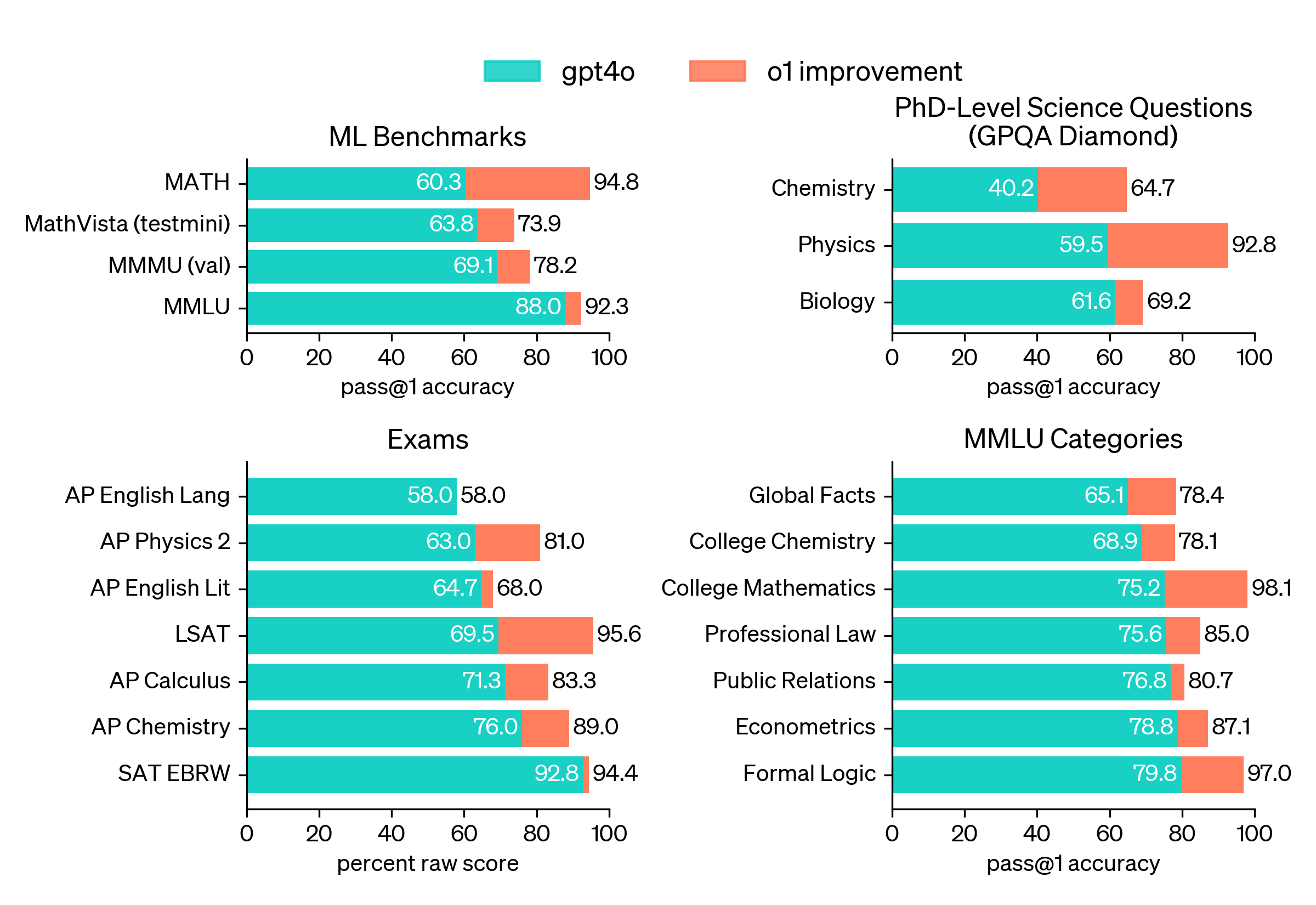

OpenAI o1 ranks in the 89th percentile on competitive programming questions (Codeforces), places among the top 500 students in the US in a qualifier for the USA Math Olympiad (AIME), and exceeds human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

Recent LLMs such as OpenAI o1 show promising reasoning capabilities (source).

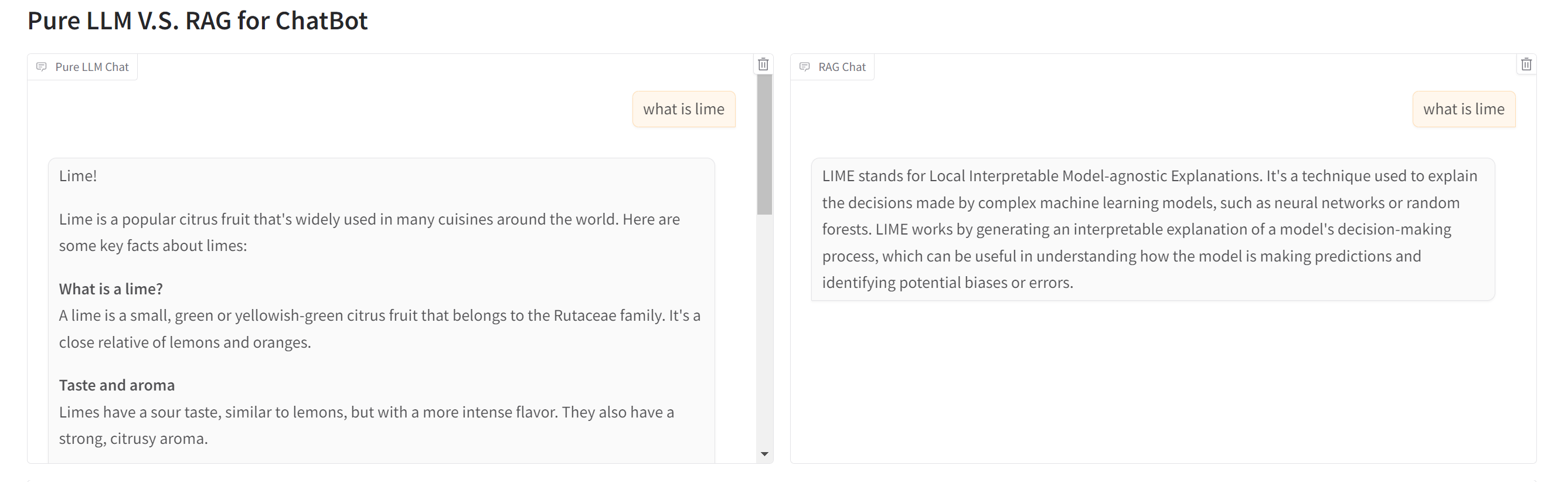

Previous LLMs struggled with mathematical reasoning, so this advance inspired us to rethink how LLMs can be applied to machine learning interpretability. The timing is right because retrieval-augmented generation (RAG) helps reduce hallucination.

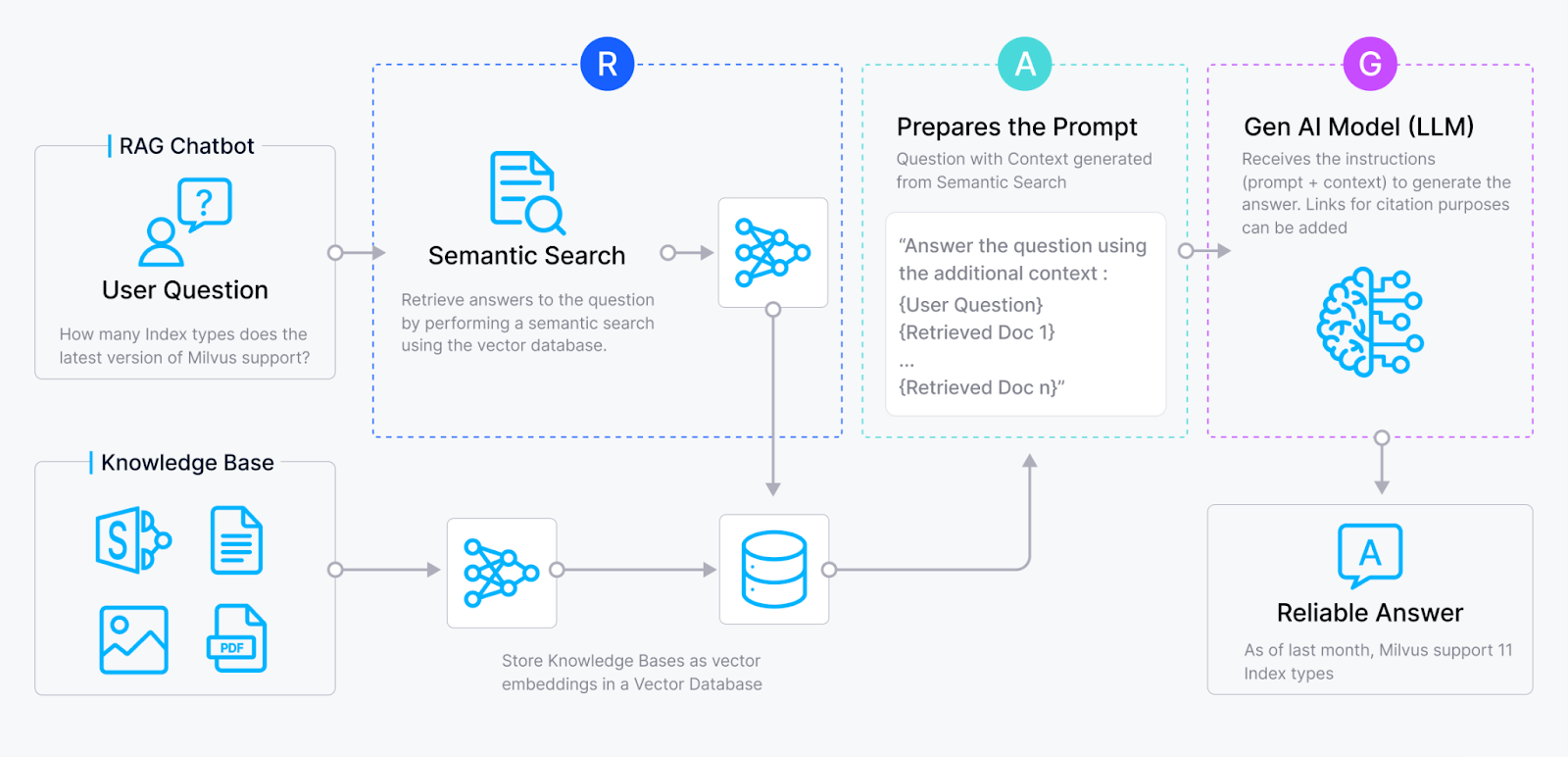

The RAG Workflow

As the name suggests, RAG consists of three major steps: retrieval, augmentation, and generation. It carries them out with two components, a retriever and a generator. The retriever fetches relevant information from a knowledge base and passes it to the generator. The generator then uses that information as context to produce a better-grounded answer.
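The three steps can be sketched in plain Python as follows. This is a minimal illustration of the workflow, not this project's code. The toy `KNOWLEDGE_BASE` and all of the functions are hypothetical. A bag-of-words cosine similarity stands in for dense embeddings stored in a vector DB, and the stub `generate` stands in for a real LLM call.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; in the real system this lives in a vector DB.
KNOWLEDGE_BASE = [
    "SHAP values attribute a prediction to each input feature.",
    "Permutation importance measures the drop in score when a feature is shuffled.",
    "Milvus is a vector database for similarity search.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words, a stand-in for a dense embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Retrieval: rank documents by similarity to the query, keep the top_k."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:top_k]


def augment(query: str, context: list[str]) -> str:
    """Augmentation: splice the retrieved passages into the prompt."""
    context_block = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{context_block}\n\nQuestion: {query}\nAnswer:"


def generate(prompt: str) -> str:
    """Generation: placeholder for an LLM call (e.g. a local model served by Ollama)."""
    return f"[LLM answer conditioned on a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    question = "How does permutation importance work?"
    passages = retrieve(question, KNOWLEDGE_BASE)
    prompt = augment(question, passages)
    print(prompt)
    print(generate(prompt))
```

In the real pipeline, only the retriever (vector search) and the generator (the LLM) change. The augmentation step stays essentially the same: the retrieved context is placed in the prompt ahead of the question.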

The typical RAG workflow (source).

System Implementation

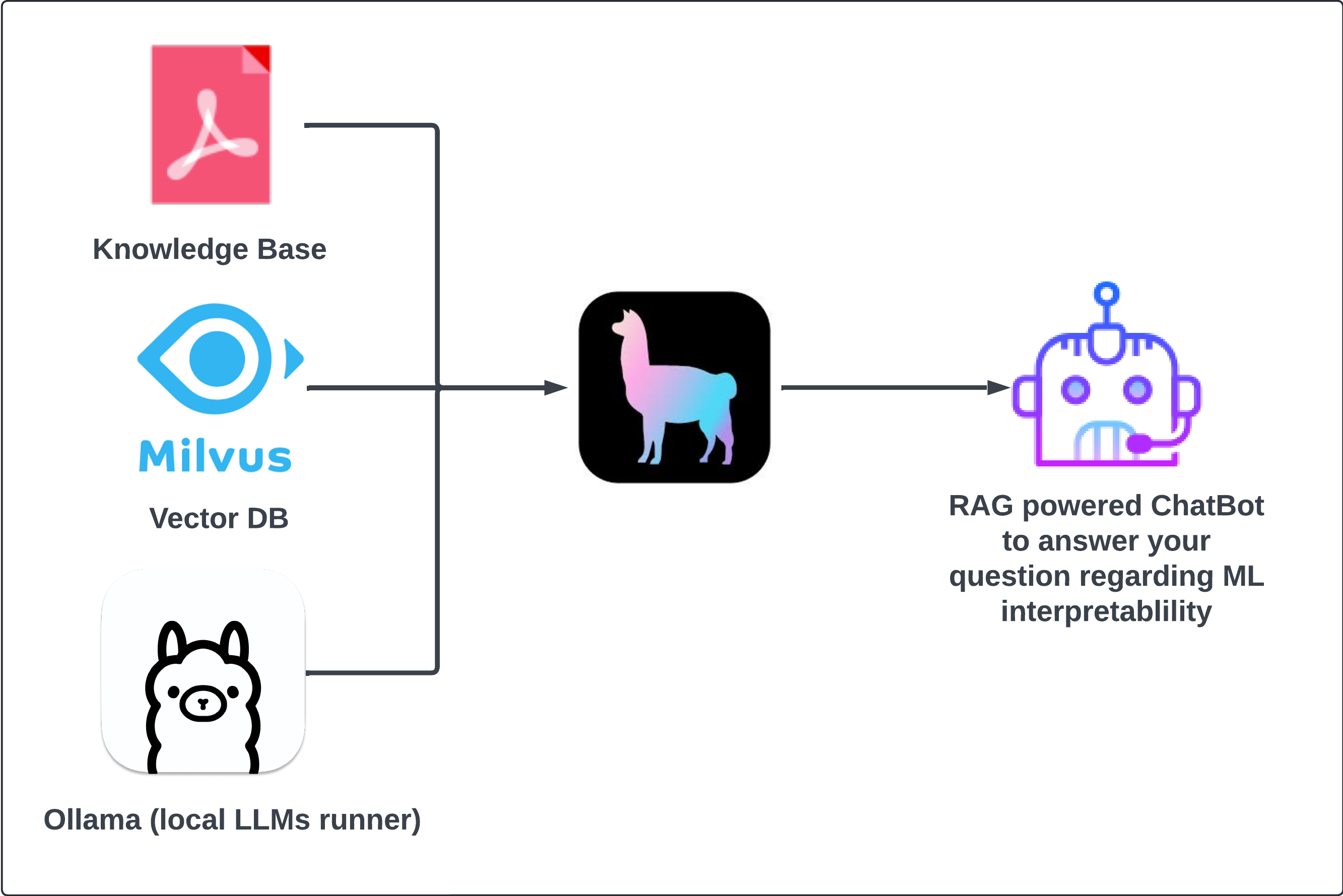

To build a RAG system, we need to build its retriever and generator. AI frameworks such as LlamaIndex help us build RAG pipelines, but we still need to choose a storage layer (a vector DB). This work builds the RAG-powered LLM application with Milvus and LlamaIndex.

We use Ollama to run open-source LLMs locally. We use LlamaIndex to process PDFs, handle chunking (including chunk overlap), index the documents into our Milvus vector DB, and finally set up the query engine (retriever).
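Chunk overlap means that consecutive chunks share some text, so content near a chunk boundary appears in both neighbouring chunks and is less likely to lose its context at retrieval time. The sketch below illustrates the idea with a hypothetical, word-based `chunk_with_overlap` helper. It is not LlamaIndex's splitter: LlamaIndex's `SentenceSplitter` exposes `chunk_size` and `chunk_overlap` parameters, but counts them in tokens and tries to respect sentence boundaries.

```python
def chunk_with_overlap(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into windows of `chunk_size` words.

    Consecutive windows share `overlap` words, so the window start advances
    by `chunk_size - overlap` words each time.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_with_overlap(sample, chunk_size=4, overlap=1):
        print(chunk)
```

Larger overlaps preserve more context across boundaries but store more redundant text in the vector DB. `chunk_size` and `chunk_overlap` are therefore worth tuning together with the retriever's `top_k`.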

*Monitoring, Fine-tuning and Improvement (under construction)

This section covers monitoring and improving the performance of the RAG system. We will test different retrieval methods (including hybrid search), retrieval evaluation metrics, and LLMs. Useful tools: ragas
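Two simple retrieval metrics are useful for comparing retrieval methods such as dense vs. hybrid search. Hit rate@k is the fraction of queries whose relevant chunk appears in the top k results. Mean reciprocal rank (MRR) averages 1/rank of the relevant chunk, counting 0 when it is not retrieved. The sketch below is a minimal version that assumes each test query has exactly one known relevant chunk id. The function names and the example ids are hypothetical toy inputs, not measured results. Tools such as ragas add LLM-based metrics on top of these (e.g. faithfulness, context recall).

```python
def hit_rate(results: list[list[str]], relevant: list[str], k: int) -> float:
    """Fraction of queries whose relevant id appears in the top-k retrieved ids."""
    if not relevant:
        raise ValueError("need at least one query")
    hits = sum(1 for ranked, gold in zip(results, relevant) if gold in ranked[:k])
    return hits / len(relevant)


def mean_reciprocal_rank(results: list[list[str]], relevant: list[str]) -> float:
    """Average of 1/rank of the relevant id (rank is 1-based; 0 if not retrieved)."""
    if not relevant:
        raise ValueError("need at least one query")
    total = 0.0
    for ranked, gold in zip(results, relevant):
        if gold in ranked:
            total += 1.0 / (ranked.index(gold) + 1)
    return total / len(relevant)


if __name__ == "__main__":
    # Hypothetical rankings from two retrievers for three test queries.
    gold_ids = ["d1", "d2", "d3"]
    dense = [["d1", "d4", "d5"], ["d6", "d2", "d7"], ["d8", "d9", "d0"]]
    hybrid = [["d1", "d4"], ["d2", "d6"], ["d8", "d3"]]
    for name, runs in [("dense", dense), ("hybrid", hybrid)]:
        print(name, hit_rate(runs, gold_ids, k=2), mean_reciprocal_rank(runs, gold_ids))
```

Both functions take one ranked list of chunk ids per query, so any retriever (dense, keyword, or hybrid) can be evaluated against the same labelled query set.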

Live Demo

Knowledge base in demo: pdf